Posted by Latinos MediaSyndication on April 6, 2023. Filed in Marketing.

This article was co-authored by Andrew Ansley.

Things, not strings. If you haven’t heard this before, it comes from a famous Google blog post that announced the Knowledge Graph.

The announcement’s 11th anniversary is only a month away, yet many still struggle to understand what “things, not strings” really means for SEO.

The quote is an attempt to convey that Google understands things and is no longer a simple keyword detection algorithm.

One could argue that entity SEO was born in May 2012. Google’s machine learning, aided by semi-structured and structured knowledge bases, could now understand the meaning behind a keyword.

The ambiguous nature of language finally had a long-term solution.

So if entities have been important for Google for over a decade, why are SEOs still confused about entities?

Good question. I see four reasons:

This article is a solution to all four problems that have prevented SEOs from fully mastering an entity-based approach to SEO.

By reading this, you’ll learn:

Entity SEO reflects where search engines are headed, both in choosing what content to rank and in determining its meaning.

Combine this with knowledge-based trust, and I believe entity SEO will define how SEO is done within the next two years.

Examples of entities

So how do you recognize an entity?

The SERP has several examples of entities that you’ve likely seen.

The most common types of entities are related to locations, people, or businesses.

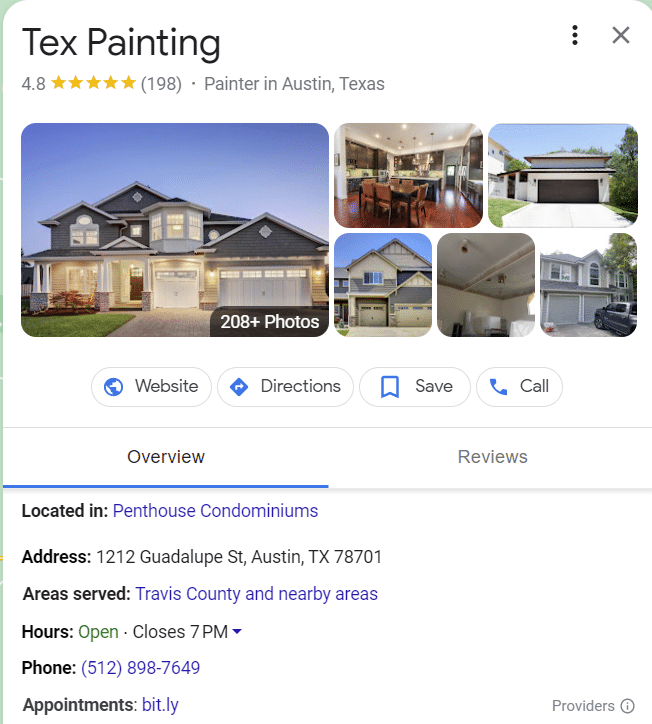

Google Business Profile

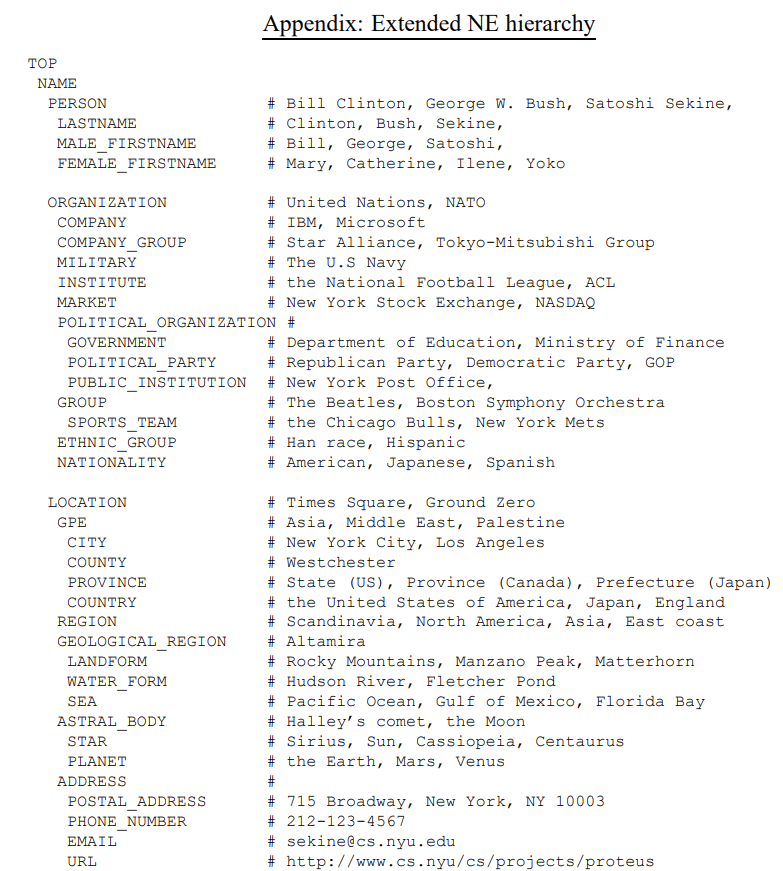

Google image search

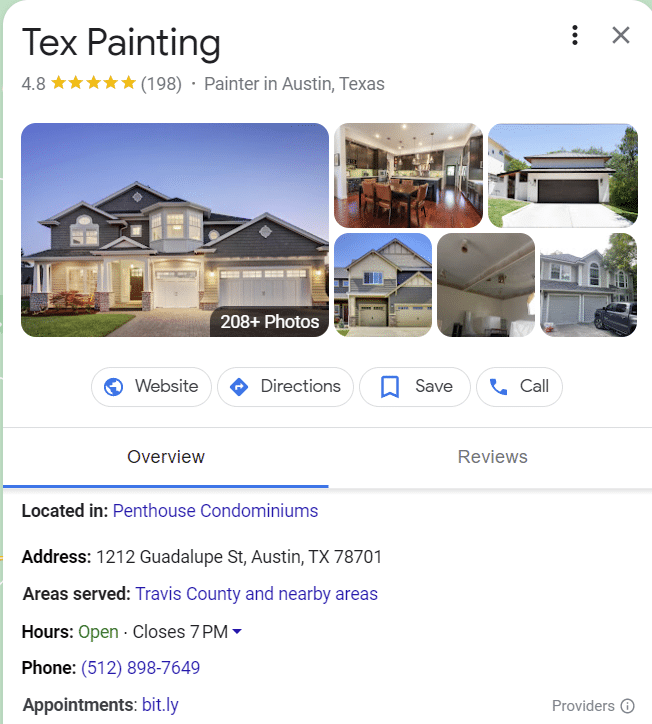

Knowledge Panel

Perhaps the best examples of entities in the SERP are intent clusters. The better a topic is understood, the more of these search features appear.

Interestingly, a single SEO campaign can change the face of the SERP when you know how to execute entity-focused campaigns.

Wikipedia entries are another example of entities, and they show the kind of information associated with an entity.

As you can see in the top left, the “fish” entity has all sorts of attributes associated with it, ranging from its anatomy to its importance to humans.

While Wikipedia contains many data points on a topic, it is by no means exhaustive.

What is an entity?

An entity is a uniquely identifiable object or thing characterized by its name(s), type(s), attributes, and relationships to other entities. An entity is only considered to exist when it appears in an entity catalog.

Entity catalogs assign a unique ID to each entity. My agency has programmatic solutions that use the unique ID associated with each entity (services, products, and brands are all included).
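To make the definition concrete, here is a minimal Python sketch of an entity catalog, assuming a simple in-memory store. The `Entity` and `EntityCatalog` classes, the IDs (`E1`, `E2`, `E3`), and the sample entries are hypothetical. The point is that names, types, attributes, and relationships hang off a unique ID rather than off a string, so two entities can share a name without being confused.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A catalog entry: a unique ID plus names, types, attributes, and relationships."""
    entity_id: str
    names: list[str]
    types: list[str]
    attributes: dict[str, str] = field(default_factory=dict)
    # Relationships point to other entities by ID, never by name.
    relations: dict[str, str] = field(default_factory=dict)


class EntityCatalog:
    """Hypothetical in-memory catalog keyed by unique entity ID."""

    def __init__(self) -> None:
        self._by_id: dict[str, Entity] = {}
        self._by_name: dict[str, set[str]] = {}

    def add(self, entity: Entity) -> None:
        if entity.entity_id in self._by_id:
            raise ValueError(f"duplicate entity ID: {entity.entity_id}")
        self._by_id[entity.entity_id] = entity
        for name in entity.names:
            # One name can belong to several entities, so store a set of IDs.
            self._by_name.setdefault(name.lower(), set()).add(entity.entity_id)

    def get(self, entity_id: str) -> Entity:
        return self._by_id[entity_id]

    def ids_for_name(self, name: str) -> set[str]:
        """All entity IDs that carry this name (an empty set if none do)."""
        return set(self._by_name.get(name.lower(), set()))


catalog = EntityCatalog()
catalog.add(Entity("E1", ["Paris"], ["City"], relations={"capital_of": "E2"}))
catalog.add(Entity("E2", ["France"], ["Country"], attributes={"currency": "euro"}))
catalog.add(Entity("E3", ["Paris Hilton", "Paris"], ["Person"]))

print(catalog.ids_for_name("Paris"))  # contains both E1 and E3: the string is ambiguous
print(catalog.get(catalog.get("E1").relations["capital_of"]).names)
```

Looking up the string “Paris” returns two IDs, while following the `capital_of` relationship from `E1` lands on exactly one entity. That is the practical difference between things and strings.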

A word or phrase that is missing from existing catalogs may still be an entity, but you can typically tell whether something is an entity by whether it appears in a catalog.

It is important to note that Wikipedia is not the deciding factor on whether something is an entity, although it is the best-known database of entities.

Any catalog can be used when talking about entities. Typically, an entity is a person, place, or thing, but ideas and concepts can also be included.

Some examples of entity catalogs include:

Entities help to bridge the gap between the worlds of unstructured and structured data.

They can be used to semantically enrich unstructured text, while textual sources can, in turn, be used to populate structured knowledge bases.

Recognizing mentions of entities in text and associating these mentions with the corresponding entries in a knowledge base is known as the task of entity linking.

Entities allow for a better understanding of the meaning of text, both for humans and for machines.

While humans can relatively easily resolve the ambiguity of entities based on the context in which they are mentioned, this presents many difficulties and challenges for machines.
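A toy Python sketch of entity linking shows how context can resolve that ambiguity for a machine. It assumes a hypothetical surface-form dictionary in which each candidate entity has a prior (“commonness”) score and a handful of typical context words; each candidate is scored by its prior plus the number of context words it shares with the text. This is a simplified heuristic for illustration, not how Google links entities.

```python
import re

# Hypothetical surface-form dictionary: mention -> candidate entity IDs, each
# with a prior ("commonness") and words that often appear near that entity.
CANDIDATES = {
    "jaguar": {
        "jaguar_animal": {"prior": 0.4, "context": {"cat", "rainforest", "prey", "species"}},
        "jaguar_cars": {"prior": 0.6, "context": {"car", "engine", "dealer", "sedan"}},
    },
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def score(mention: str, entity_id: str, context: set[str], weight: float) -> float:
    """Prior probability plus a bonus for each context word shared with the entity."""
    info = CANDIDATES[mention][entity_id]
    return info["prior"] + weight * len(info["context"] & context)


def link_entities(text: str, weight: float = 1.0) -> dict[str, str]:
    """Link every known mention in `text` to its highest-scoring candidate."""
    context = set(tokenize(text))
    return {
        mention: max(CANDIDATES[mention], key=lambda eid: score(mention, eid, context, weight))
        for mention in context & set(CANDIDATES)
    }


print(link_entities("The jaguar stalked its prey through the rainforest"))
print(link_entities("The new Jaguar sedan has a quiet engine"))
print(link_entities("I saw a jaguar"))  # no useful context, so the prior decides
```

The first sentence links “jaguar” to the animal, the second to the car maker, and the third, which offers no helpful context, falls back to the more common sense.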

The knowledge base entry of an entity summarizes what we know about that entity.

Because the world is constantly changing, new facts keep surfacing. Keeping up with these changes requires continuous effort from editors and content managers, which is a demanding task at scale.

Analyzing the documents in which entities are mentioned can support, or even fully automate, the process of finding new facts and facts that need updating.

Researchers call this the problem of knowledge base population. Because it depends on correctly connecting mentions in text to knowledge base entries, it is one reason entity linking is important.
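As a rough illustration of knowledge base population, the Python sketch below extracts one kind of fact with a regular expression and compares it against a small knowledge base, flagging facts that are new or that conflict with what is stored. The pattern, the `headquartered_in` attribute, and the company names are hypothetical; real systems use trained relation extractors and entity linking rather than a single hand-written pattern.

```python
import re

# Hypothetical knowledge base: entity name -> {attribute: value}.
KB = {
    "Acme Corp": {"headquartered_in": "Springfield"},
    "Globex": {},
}

# One naive extraction pattern; it assumes the subject starts the sentence.
PATTERN = re.compile(r"(?P<subject>[A-Z][\w ]*?) is headquartered in (?P<value>[A-Z]\w+)")


def propose_updates(document: str, kb: dict) -> list[tuple[str, str, str, str]]:
    """Return (status, subject, attribute, value) for each fact worth reviewing."""
    updates = []
    for match in PATTERN.finditer(document):
        subject, value = match["subject"], match["value"]
        if subject not in kb:
            continue  # only update entities the KB already knows about
        current = kb[subject].get("headquartered_in")
        if current is None:
            updates.append(("new", subject, "headquartered_in", value))
        elif current != value:
            updates.append(("conflict", subject, "headquartered_in", value))
    return updates


doc = ("Acme Corp is headquartered in Shelbyville. Globex is headquartered in Ogdenville. "
       "Initech is headquartered in Austin.")
for update in propose_updates(doc, KB):
    print(update)
```

Flagged items could be sent to an editor for review (the “supported” case) or written back automatically (the “fully automated” case).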

Entities facilitate a semantic understanding of the user’s information need, as expressed by the keyword query, and the document’s content. Entities thus may be used to improve query and/or document representations.

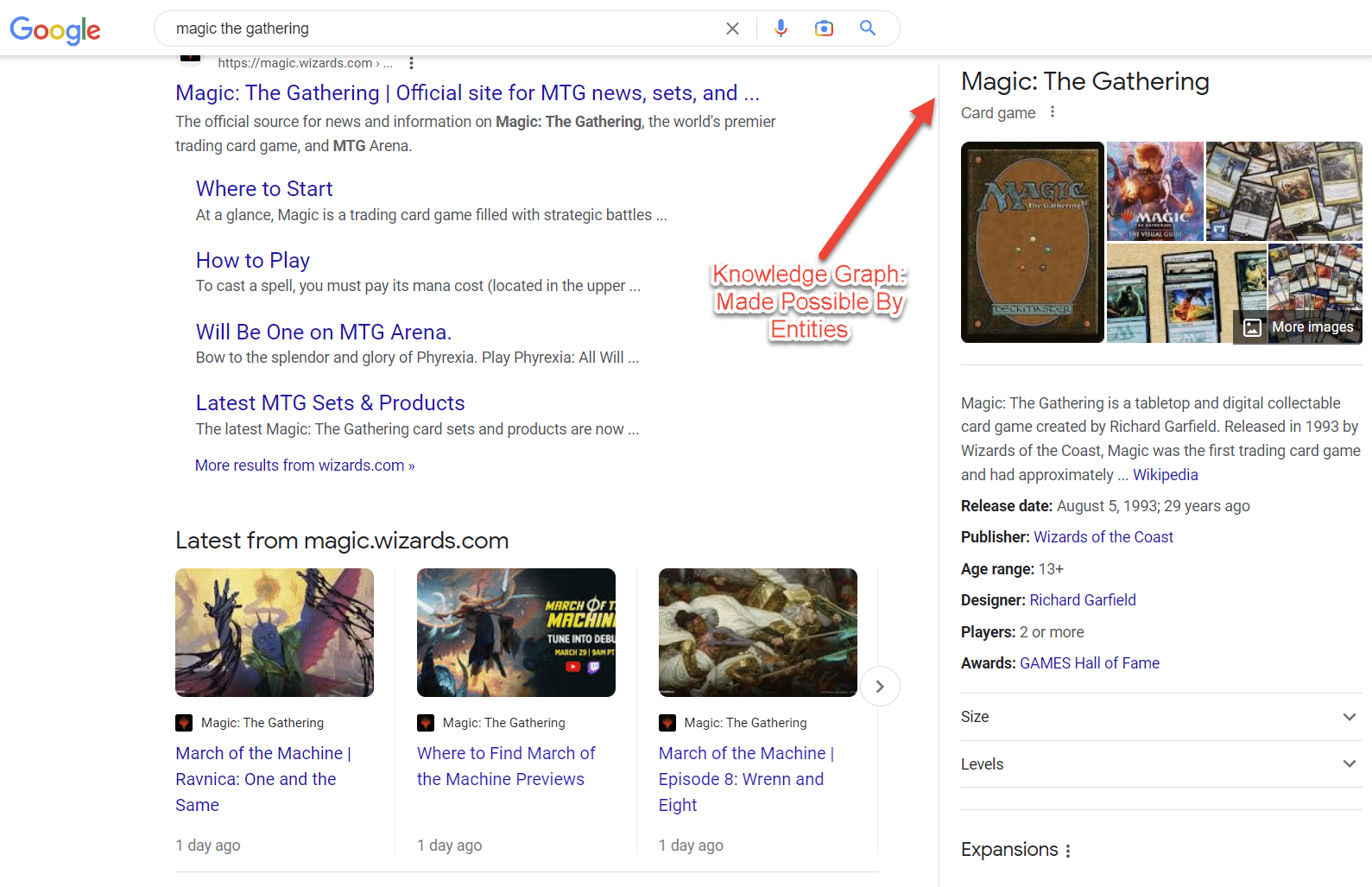

In the Extended Named Entity research paper, the author identifies around 160 entity types. Here are two of seven screenshots from the list.

1/7 entity types

3/7 entity types

Some categories of entities are easier to define than others, but it’s important to remember that concepts and ideas are entities too. Those two categories are very difficult for Google to scale on its own.

You can’t teach Google with just a single page when working with vague concepts. Entity understanding requires many articles and many references sustained over time.

Google’s history with entities

On July 16, 2010, Google acquired Metaweb, the company behind Freebase. This acquisition was the first major step toward the current entity search system.

After investing in Freebase, Google concluded that Wikidata offered a better solution. Google then worked to migrate Freebase’s data into Wikidata, a task that proved far more difficult than expected.

Five Google scientists wrote a paper titled “From Freebase to Wikidata: The Great Migration.” Key takeaways include:

“Freebase is built on the notions of objects, facts, types, and properties. Each Freebase object has a stable identifier called a “mid” (for Machine ID).”

“Wikidata’s data model relies on the notions of item and statement. An item represents an entity, has a stable identifier called “qid”, and may have labels, descriptions, and aliases in multiple languages; further statements and links to pages about the entity in other Wikimedia projects – most prominently Wikipedia. Contrary to Freebase, Wikidata statements do not aim to encode true facts, but claims from different sources, which can also contradict each other…”
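The two data models quoted above can be contrasted in a short Python sketch. The class and field names are simplified assumptions for illustration, not the actual Freebase or Wikidata schemas, and the IDs are placeholders. A Freebase-style fact is a single assertion about an object identified by its mid; a Wikidata-style item carries multilingual labels, descriptions, and aliases plus a list of sourced claims that are allowed to disagree.

```python
from dataclasses import dataclass, field


@dataclass
class FreebaseFact:
    """Freebase style: an asserted fact about an object identified by its mid."""
    mid: str      # placeholder standing in for a "/m/..." machine ID
    prop: str
    value: str


@dataclass
class Statement:
    """Wikidata style: a claim together with the source that makes it."""
    prop: str
    value: str
    source: str


@dataclass
class WikidataItem:
    qid: str                                                      # placeholder item ID
    labels: dict[str, str] = field(default_factory=dict)          # language code -> label
    descriptions: dict[str, str] = field(default_factory=dict)    # language code -> description
    aliases: dict[str, list[str]] = field(default_factory=dict)   # language code -> aliases
    statements: list[Statement] = field(default_factory=list)

    def claims_for(self, prop: str) -> dict[str, list[str]]:
        """Group every value claimed for `prop` with the sources that claim it."""
        grouped: dict[str, list[str]] = {}
        for statement in self.statements:
            if statement.prop == prop:
                grouped.setdefault(statement.value, []).append(statement.source)
        return grouped


fact = FreebaseFact(mid="/m/placeholder", prop="height", value="300 m")
item = WikidataItem(
    qid="Q_PLACEHOLDER",
    labels={"en": "Example Tower", "es": "Torre de Ejemplo"},
    descriptions={"en": "a hypothetical tower"},
    aliases={"en": ["The Tower"]},
    statements=[
        Statement("height", "300 m", "source A"),
        Statement("height", "312 m", "source B"),  # contradicts source A; both are kept
    ],
)
print(fact)
print(item.claims_for("height"))
```

Keeping both heights, each tied to its source, is what the quote means by claims that “can also contradict each other.”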

Entities are defined in these knowledge bases, but Google still had to build its entity knowledge for unstructured data (e.g., blogs).

Google partnered with Bing and Yahoo and created Schema.org to accomplish this task.

Google publishes structured data guidelines so website managers have tools to help Google understand their content. Remember, Google wants to focus on things, not strings.

In Google’s words:

“You can help us by providing explicit clues about the meaning of a page to Google by including structured data on the page. Structured data is a standardized format for providing information about a page and classifying the page content; for example, on a recipe page, what are the ingredients, the cooking time and temperature, the calories, and so on.”

Google continues by saying:

“You must include all the required properties for an object to be eligible for appearance in Google Search with enhanced display. In general, defining more recommended features can make it more likely that your information can appear in Search results with enhanced display. However, it is more important to supply fewer but complete and accurate recommended properties rather than trying to provide every possible recommended property with less complete, badly-formed, or inaccurate data.”
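To tie the two quotes together, here is a minimal Python sketch that builds Recipe structured data as JSON-LD and checks it before publishing. The Schema.org properties used (`name`, `image`, `recipeIngredient`, `cookTime`, `nutrition` with `NutritionInformation`) are real, but the recipe values and image URL are made up, and the `REQUIRED` set is an assumption: confirm the current required properties in Google’s Recipe structured data documentation.

```python
import json

# Assumed required properties for Google's Recipe rich results; verify against
# Google's current documentation before relying on this list.
REQUIRED = {"name", "image"}


def recipe_jsonld(name, image, ingredients, cook_time_iso8601, calories):
    """Build a Schema.org Recipe object as a JSON-serializable dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image],
        "recipeIngredient": ingredients,
        "cookTime": cook_time_iso8601,  # ISO 8601 duration, e.g. "PT30M"
        "nutrition": {"@type": "NutritionInformation", "calories": calories},
    }


def missing_required(data: dict) -> set:
    """Return required properties that are absent or empty."""
    return {prop for prop in REQUIRED if not data.get(prop)}


recipe = recipe_jsonld(
    name="Black Bean Tacos",
    image="https://example.com/photos/tacos.jpg",
    ingredients=["2 cups black beans", "8 corn tortillas", "1 avocado"],
    cook_time_iso8601="PT20M",
    calories="320 calories",
)
problems = missing_required(recipe)
if problems:
    raise ValueError(f"missing required properties: {sorted(problems)}")
# Embed this output in the page inside <script type="application/ld+json">.
print(json.dumps(recipe, indent=2))
```

In line with Google’s advice, it is better to leave out a recommended property you cannot fill accurately than to publish it with incomplete or inaccurate data.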

More could be said about schema, but suffice it to say schema is an incredible tool for SEOs looking to make page content clear to search engines.

The last piece of the puzzle comes from Google’s blog announcement titled “Improving Search for The Next 20 Years.”

Document relevance and quality are the main ideas behind this announcement. The first method Google used for determining the content of a page was entirely focused on keywords.

Google then added topic layers to search. This layer was made possible by knowledge graphs and by systematically scraping and structuring data across the web.

That brings us to the current search system. Google went from 570 million entities and 18 billion facts to 8 billion entities and 800 billion facts in less than 10 years. As these numbers grow, entity search improves.

How is the entity model an improvement over previous search models?

Traditional keyword-based information retrieval (IR) models have an inherent limitation: they cannot retrieve relevant documents that have no explicit term matches with the query.

When you use Ctrl+F to find text on a page, you are using something similar to the traditional keyword-based information retrieval model.

An insane amount of data is published on the web every day.

It simply isn’t feasible for Google to understand the meaning of every word, every paragraph, every article, and every website.

Instead, entities provide a structure from which Google can minimize the computational load while improving understanding.

“Concept-based retrieval methods attempt to tackle this challenge by relying on auxiliary structures to obtain semantic representations of queries and documents in a higher-level concept space. Such structures include controlled vocabularies (dictionaries and thesauri), ontologies, and entities from a knowledge repository.”

– Entity-Oriented Search, Chapter 8.3
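A small Python sketch illustrates the idea behind concept-based retrieval. It assumes a hypothetical controlled vocabulary that maps surface forms to entity IDs. The query and the document share no terms, so keyword matching scores zero, but once both are mapped into the entity space they match completely.

```python
import re

# Hypothetical controlled vocabulary: surface form -> entity ID.
VOCABULARY = {
    "nyc": "ent:new_york_city",
    "new york city": "ent:new_york_city",
    "big apple": "ent:new_york_city",
    "cheap": "ent:low_cost",
    "budget": "ent:low_cost",
    "hotels": "ent:hotel",
    "lodging": "ent:hotel",
}


def terms(text: str) -> set[str]:
    """Keyword representation: the set of lowercase words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def entities(text: str) -> set[str]:
    """Concept representation: entity IDs whose vocabulary phrases occur in the text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    padded = " " + " ".join(tokens) + " "
    return {eid for phrase, eid in VOCABULARY.items() if f" {phrase} " in padded}


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


query = "cheap NYC hotels"
document = "Budget lodging options in the Big Apple"
print("keyword overlap:", jaccard(terms(query), terms(document)))
print("entity overlap:", jaccard(entities(query), entities(document)))
```

Real systems replace the hand-built dictionary with entity linking against a knowledge base and weight entities rather than treating them as a flat set, but the principle is the same.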

Krisztian Balog, who wrote the definitive book on entities, identifies three possible solutions to this limitation of the traditional information retrieval model.

The goal of these three approaches is to gain a richer representation of the user’s information need by identifying entities strongly related to the query.

Balog then identifies six algorithms associated with projection-based methods of entity mapping (projection methods represent text in a latent entity space and compare the resulting vectors geometrically, for example with cosine similarity).

Here is what Balog writes:

“A total of four attention features are designed, which are extracted for each query entity. Entity ambiguity features are meant to characterize the risk associated with an entity annotation. These are: (1) the entropy of the probability of the surface form being linked to different entities (e.g., in Wikipedia), (2) whether the annotated entity is the most popular sense of the surface form (i.e., has the highest commonness score), and (3) the difference in commonness scores between the most likely and second most likely candidates for the given surface form. The fourth feature is closeness, which is defined as the cosine similarity between the query entity and the query in an embedding space. Specifically, a joint entity-term embedding is trained using the skip-gram model on a corpus, where entity mentions are replaced with the corresponding entity identifiers. The query’s embedding is taken to be the centroid of the query terms’ embeddings.”
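The four features in the passage are straightforward to compute once you have link statistics and embeddings. The Python sketch below assumes a hypothetical commonness table for the surface form “jaguar” and tiny made-up embedding vectors (real ones come from a skip-gram model trained as the quote describes and have hundreds of dimensions). It also assumes the natural logarithm for the entropy, which the quote does not specify.

```python
import math

# Hypothetical P(entity | surface form) ("commonness") for the mention "jaguar".
COMMONNESS = {"jaguar": {"jaguar_cars": 0.6, "jaguar_animal": 0.35, "jaguar_band": 0.05}}

# Hypothetical joint word/entity embeddings.
EMBEDDINGS = {
    "jaguar": [0.5, 0.5, 0.0],
    "speed": [0.9, 0.1, 0.0],
    "jaguar_animal": [0.2, 0.9, 0.1],
    "jaguar_cars": [0.9, 0.2, 0.0],
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def attention_features(surface_form, entity, query_terms):
    probs = COMMONNESS[surface_form]
    ranked = sorted(probs.values(), reverse=True)
    # (1) Entropy of the link distribution: higher means a more ambiguous mention.
    entropy = -sum(p * math.log(p) for p in probs.values() if p > 0)
    # (2) Whether the annotated entity is the most popular sense of the surface form.
    is_most_popular = probs[entity] == ranked[0]
    # (3) Gap between the two most likely candidates (full probability if only one).
    margin = ranked[0] - (ranked[1] if len(ranked) > 1 else 0.0)
    # (4) Closeness: cosine between the entity and the centroid of the query terms.
    vectors = [EMBEDDINGS[t] for t in query_terms]
    centroid = [sum(dim) / len(vectors) for dim in zip(*vectors)]
    closeness = cosine(EMBEDDINGS[entity], centroid)
    return {"entropy": entropy, "is_most_popular": is_most_popular,
            "margin": margin, "closeness": closeness}


query = ["jaguar", "speed"]
print(attention_features("jaguar", "jaguar_cars", query))
print(attention_features("jaguar", "jaguar_animal", query))
```

The three ambiguity features are identical for every candidate of the same surface form except the popularity flag; closeness is what distinguishes candidates by how well they fit the rest of the query.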

For now, it is important to have surface-level familiarity with these six entity-centric algorithms.

The main takeaway is that two approaches exist: projecting documents onto a latent entity layer and explicitly annotating documents with entities.
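The difference between the two approaches can be sketched in Python. Explicit annotation yields the set of entity IDs actually mentioned in a document; latent projection, loosely in the spirit of explicit semantic analysis (ESA), represents the document as a vector of similarity scores against every entity’s description, so an entity can receive weight without being named. The entity descriptions, alias table, IDs, and the plain term-count cosine similarity are all hypothetical simplifications.

```python
import math
import re
from collections import Counter

# Hypothetical entity descriptions (stand-ins for knowledge base articles).
ENTITY_TEXT = {
    "ent:coffee": "coffee beverage brewed from roasted beans caffeine espresso",
    "ent:espresso_machine": "machine that brews espresso by forcing hot water through ground coffee",
    "ent:tea": "tea beverage made by steeping leaves in hot water",
}
# Hypothetical alias table used for explicit annotation.
ALIASES = {"espresso machine": "ent:espresso_machine", "coffee": "ent:coffee", "tea": "ent:tea"}


def bag(text):
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def explicit_annotation(doc):
    """Entity IDs whose aliases literally appear in the document."""
    padded = " " + " ".join(re.findall(r"[a-z]+", doc.lower())) + " "
    return {eid for alias, eid in ALIASES.items() if f" {alias} " in padded}


def latent_projection(doc):
    """Similarity of the document to every entity description (a dense entity vector)."""
    d = bag(doc)
    return {eid: round(cosine(d, bag(text)), 3) for eid, text in ENTITY_TEXT.items()}


doc = "Grind the roasted beans and pull a shot of espresso with hot water"
print(explicit_annotation(doc))  # no aliases appear, so the set is empty
print(latent_projection(doc))    # every entity gets a score; coffee scores highest
```

The document never says “coffee,” so explicit annotation finds nothing, while the latent projection still ranks the coffee entity first. Explicit annotations are precise but sparse; latent projections are dense but harder to interpret.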

Three types of data structures

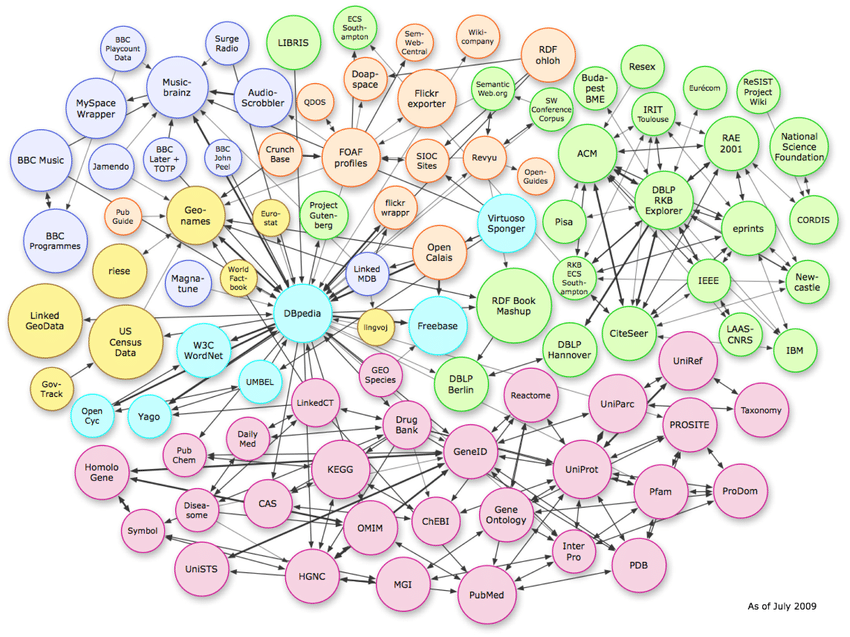

The image above shows the complex relationships that exist in vector space. While the example shows knowledge graph connections, the same pattern can be replicated page by page with schema markup.
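One way to carry knowledge-graph-style connections down to the page level is to express them as linked JSON-LD nodes. The Python sketch below groups a few (subject, property, object) triples into a Schema.org `@graph` in which nodes reference each other by `@id`. The URLs, the brand and product names, and the `sameAs` target are placeholders.

```python
import json

# Placeholder triples: (subject @id, Schema.org property, object @id reference or literal).
TRIPLES = [
    ("https://example.com/#org", "@type", "Organization"),
    ("https://example.com/#org", "name", "Acme Coffee"),
    ("https://example.com/#org", "sameAs", "https://example.org/catalog/acme-coffee"),
    ("https://example.com/#product", "@type", "Product"),
    ("https://example.com/#product", "name", "Acme Cold Brew"),
    ("https://example.com/#product", "brand", {"@id": "https://example.com/#org"}),
]


def triples_to_graph(triples):
    """Group triples by subject into JSON-LD nodes that link to each other by @id."""
    nodes = {}
    for subject, prop, obj in triples:
        node = nodes.setdefault(subject, {"@id": subject})
        if prop in node:
            # Repeated property: collect its values into a list.
            existing = node[prop]
            node[prop] = (existing if isinstance(existing, list) else [existing]) + [obj]
        else:
            node[prop] = obj
    return {"@context": "https://schema.org", "@graph": list(nodes.values())}


print(json.dumps(triples_to_graph(TRIPLES), indent=2))
```

The product’s `brand` points at the organization’s `@id` instead of repeating its name as a string, which mirrors an edge in the knowledge graph.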

To understand entities, it is important to know the three types of data structures that algorithms use.