Posted by Latinos MediaSyndication on April 12, 2023. Filed in Marketing.


Python is a powerful programming language that has gained popularity in the SEO industry over the past few years.

With its relatively simple syntax, efficient performance and abundance of libraries and frameworks, Python has revolutionized how many SEOs approach their work.

Python offers a versatile toolset that can help make the optimization process faster, more accurate and more effective.

This article explores five Python scripts to help boost your SEO efforts.

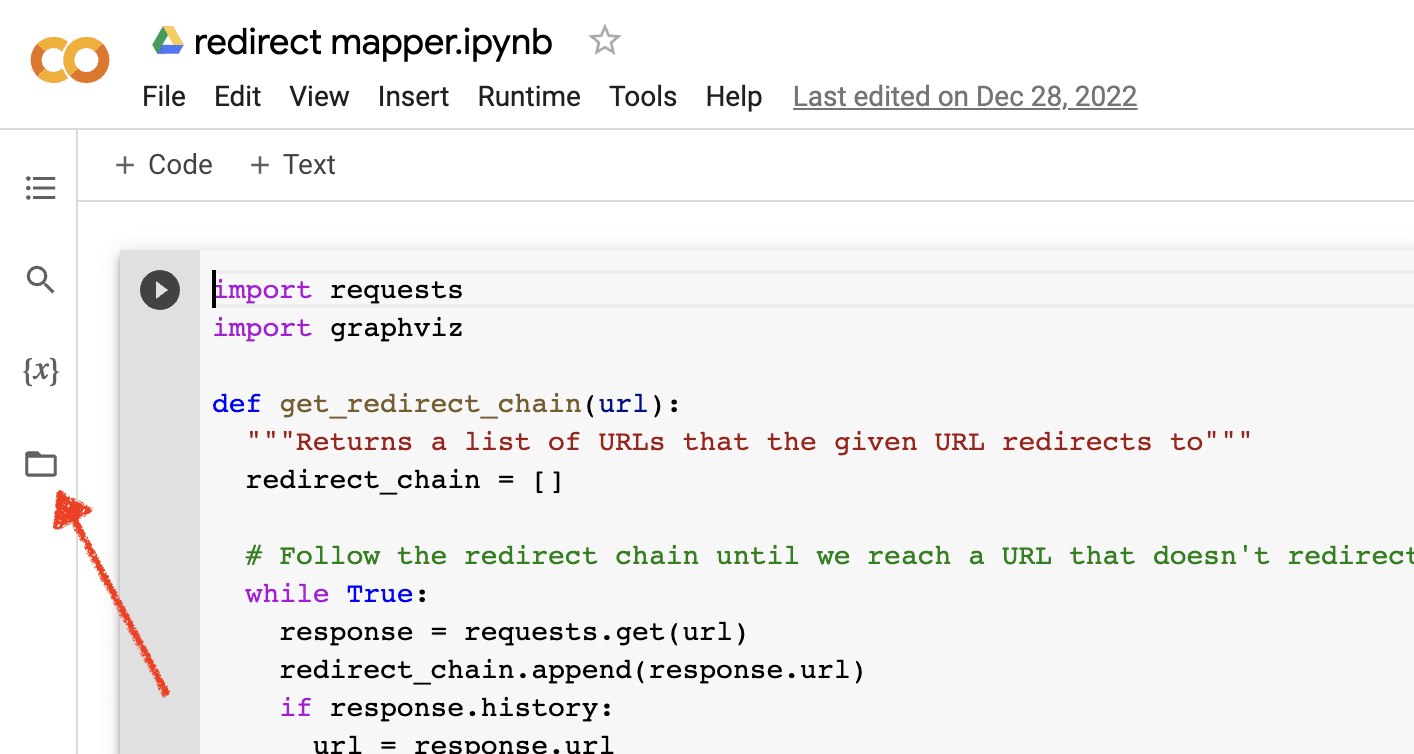

If you’re looking to dip your toes in Python programming, Google Colab is worth considering.

It’s a free, web-based platform that provides a convenient playground for writing and running Python code without needing a complex local setup.

Essentially, it allows you to access Jupyter Notebooks within your browser and provides a host of pre-installed libraries for data science and machine learning.

Plus, it’s built on top of Google Drive, so you can easily save and share your work with others.

To get started, follow these steps:

Enable file uploads

Once you open Google Colab, you’ll first need to enable the ability to create a temporary file repository. It’s as simple as clicking the folder icon.

This lets you upload temporary files and then download any results files.

Many of our Python scripts require a source file to work. To upload a file, simply click the upload button.

Once you finish the setup, you can start testing the following Python scripts.

Script 1: Automate a redirect map

Creating redirect maps for large sites can be incredibly time-consuming. Finding ways to automate the process can help us save time and focus on other tasks.

How this script works

This script focuses on analyzing the web content to find closely matching articles.

From here, you can manually review any URLs with a low similarity percentage to find the next closest match.
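The manual review step can be narrowed down with a small filter. The following is a minimal sketch, assuming the output uses the same column names as the script's redirect_map.csv; the `flag_for_review` helper, the 0.5 threshold, and the sample URLs are hypothetical and only there for illustration.

```python
import csv
import io

def flag_for_review(rows, threshold=0.5):
    """Return the rows whose similarity score is below the threshold."""
    flagged = []
    for row in rows:
        if float(row["% Identical"]) < threshold:
            flagged.append(row)
    return flagged

# Hypothetical sample in the same column layout as redirect_map.csv
sample_csv = io.StringIO(
    "From URL,To URL,% Identical\n"
    "https://example.com/old-a,https://example.com/new-a,0.92\n"
    "https://example.com/old-b,https://example.com/new-b,0.31\n"
)
rows = list(csv.DictReader(sample_csv))

# Only the low-scoring mapping is kept for a manual check
needs_review = flag_for_review(rows, threshold=0.5)
for row in needs_review:
    print(row["From URL"], "->", row["To URL"], row["% Identical"])
```

To run this against real output, replace the in-memory sample with `open("redirect_map.csv", newline="")`. The right threshold depends on how similar your old and new content tends to be, so treat 0.5 as a starting point rather than a recommendation.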

Get the script

# Import libraries
from bs4 import BeautifulSoup, SoupStrainer
from polyfuzz import PolyFuzz
import concurrent.futures
import csv
import pandas as pd
import requests

# Import URLs
with open("source_urls.txt", "r") as file:
    url_list_a = [line.strip() for line in file]

with open("target_urls.txt", "r") as file:
    url_list_b = [line.strip() for line in file]

# Create a content scraper via bs4 that keeps only paragraph text
def get_content(url_argument):
    page_source = requests.get(url_argument).text
    strainer = SoupStrainer('p')
    soup = BeautifulSoup(page_source, 'lxml', parse_only=strainer)
    paragraph_list = [element.text for element in soup.find_all('p')]
    content = " ".join(paragraph_list)
    return content

# Scrape the URLs for content
with concurrent.futures.ThreadPoolExecutor() as executor:
    content_list_a = list(executor.map(get_content, url_list_a))
    content_list_b = list(executor.map(get_content, url_list_b))

content_dictionary = dict(zip(url_list_b, content_list_b))

# Get content similarities via polyfuzz
model = PolyFuzz("TF-IDF")
model.match(content_list_a, content_list_b)
data = model.get_matches()

# Map similarity data back to URLs
def get_key(argument):
    for key, value in content_dictionary.items():
        if argument == value:
            return key
    # No target page has this content, so leave the mapping empty
    return None

with concurrent.futures.ThreadPoolExecutor() as executor:
    result = list(executor.map(get_key, data["To"]))

# Create a dataframe for the final results
to_zip = list(zip(url_list_a, result, data["Similarity"]))
df = pd.DataFrame(to_zip)
df.columns = ["From URL", "To URL", "% Identical"]

# Export to a spreadsheet
with open("redirect_map.csv", "w", newline="") as file:
    columns = ["From URL", "To URL", "% Identical"]
    writer = csv.writer(file)
    writer.writerow(columns)
    for row in to_zip:
        writer.writerow(row)

Script 2: Write meta descriptions in bulk

While meta descriptions are not a direct ranking factor, they help us improve our organic click-through rates. Leaving meta descriptions blank increases the chances that Google will create its own.

If your SEO audit shows a large number of URLs missing a meta description, it may be difficult to make time to write all of those by hand, especially for ecommerce websites.

This script aims to save you time by automating that process.
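The script in this section caps each description at 155 characters by slicing the string, which can cut a word in half. One possible refinement is to trim back to the last full word before adding the ellipsis. The sketch below is an illustration only: `truncate_description` is a hypothetical helper, not part of the original script or of sumy.

```python
def truncate_description(text, limit=155):
    """Shorten text to at most `limit` characters, ending on a whole word."""
    # Collapse runs of whitespace so the length check is meaningful
    text = " ".join(text.split())
    if len(text) <= limit:
        return text
    # Reserve three characters for the trailing ellipsis
    cut = text[: limit - 3]
    # Drop the last (possibly partial) word if there is a space to cut at
    if " " in cut:
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip(" ,;:") + "..."

long_text = "word " * 50
short_text = "A short description."
print(truncate_description(long_text))
print(truncate_description(short_text))
```

Inside the script's loop, this helper could stand in for the `if len(description) > 155` check.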

How the script works

!pip install sumy

from sumy.parsers.html import HtmlParser
from sumy.nlp.tokenizers import Tokenizer
from sumy.nlp.stemmers import Stemmer
from sumy.utils import get_stop_words
from sumy.summarizers.lsa import LsaSummarizer
import csv

# 1) Import a list of URLs from a txt file
with open('urls.txt') as f:
    urls = [line.strip() for line in f]

results = []

# 2) Analyze the content on each URL and summarize it in three sentences
for url in urls:
    parser = HtmlParser.from_url(url, Tokenizer("english"))
    stemmer = Stemmer("english")
    summarizer = LsaSummarizer(stemmer)
    summarizer.stop_words = get_stop_words("english")
    description = summarizer(parser.document, 3)
    description = " ".join(str(sentence) for sentence in description)

    # 3) Truncate summaries longer than 155 characters
    if len(description) > 155:
        description = description[:152] + '...'

    results.append({
        'url': url,
        'description': description
    })

# 4) Export the results to a CSV file
with open('results.csv', 'w', newline='') as f:
    writer = csv.DictWriter(f, fieldnames=['url', 'description'])
    writer.writeheader()
    writer.writerows(results)

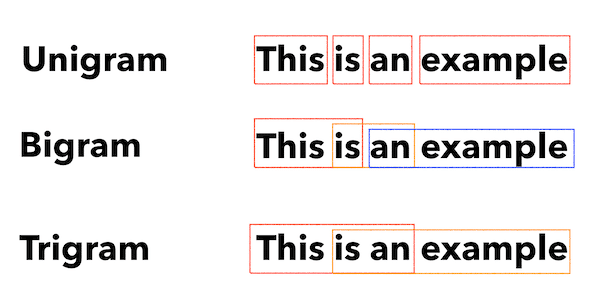

Script 3: Analyze keywords with N-grams

N-grams are not a new concept but are still useful for SEO. They can help us understand themes across large sets of keyword data.

This script outputs results in a TXT file that breaks out the keywords into unigrams, bigrams, and trigrams.

# Import necessary libraries
import re

# Open the text file and read its contents into a list of words
with open('keywords.txt', 'r') as f:
    words = f.read().split()

# Use a regular expression to remove any non-alphabetic characters from the words,
# then drop words left empty by that step (for example, purely numeric tokens)
words = [re.sub(r'[^a-zA-Z]', '', word) for word in words]
words = [word for word in words if word]

# Initialize empty dictionaries for storing the unigrams, bigrams, and trigrams
unigrams = {}
bigrams = {}
trigrams = {}

# Iterate through the list of words and count the number of occurrences
# of each unigram, bigram, and trigram
for i in range(len(words)):
    # Unigrams
    if words[i] in unigrams:
        unigrams[words[i]] += 1
    else:
        unigrams[words[i]] = 1

    # Bigrams
    if i < len(words) - 1:
        bigram = words[i] + ' ' + words[i+1]
        if bigram in bigrams:
            bigrams[bigram] += 1
        else:
            bigrams[bigram] = 1

    # Trigrams
    if i < len(words) - 2:
        trigram = words[i] + ' ' + words[i+1] + ' ' + words[i+2]
        if trigram in trigrams:
            trigrams[trigram] += 1
        else:
            trigrams[trigram] = 1

# Sort the dictionaries by the number of occurrences
sorted_unigrams = sorted(unigrams.items(), key=lambda x: x[1], reverse=True)
sorted_bigrams = sorted(bigrams.items(), key=lambda x: x[1], reverse=True)
sorted_trigrams = sorted(trigrams.items(), key=lambda x: x[1], reverse=True)

# Write the ten most common of each to a text file
with open('results.txt', 'w') as f:
    f.write("Most common unigrams:\n")
    for unigram, count in sorted_unigrams[:10]:
        f.write(unigram + ": " + str(count) + "\n")

    f.write("\nMost common bigrams:\n")
    for bigram, count in sorted_bigrams[:10]:
        f.write(bigram + ": " + str(count) + "\n")

    f.write("\nMost common trigrams:\n")
    for trigram, count in sorted_trigrams[:10]:
        f.write(trigram + ": " + str(count) + "\n")
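The three counting branches in the script follow the same pattern, so they could be condensed into one function built on the standard library's `collections.Counter`. This is a sketch of an alternative, not the author's version; `count_ngrams` and the sample keywords are hypothetical.

```python
from collections import Counter

def count_ngrams(words, n):
    """Count every run of n consecutive words in the list."""
    # zip over n shifted copies of the list to form sliding windows
    grams = zip(*(words[i:] for i in range(n)))
    return Counter(" ".join(gram) for gram in grams)

words = "best running shoes best running socks".split()
unigrams = count_ngrams(words, 1)
bigrams = count_ngrams(words, 2)
trigrams = count_ngrams(words, 3)

print(bigrams.most_common(1))  # [('best running', 2)]
```

`Counter.most_common(10)` returns the ten most frequent entries already sorted, which replaces the separate sorting step.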

Script 4: Group keywords into topic clusters

With new SEO projects, keyword research is always in the early stages. Sometimes we deal with thousands of keywords in a dataset, making grouping challenging.

Python allows us to automatically cluster keywords into similar groups to identify trends and complete our keyword mapping.

How this script works

import csv
from sklearn.cluster import AffinityPropagation
from sklearn.feature_extraction.text import TfidfVectorizer

# Read keywords from text file
with open("keywords.txt", "r") as f:
    keywords = f.read().splitlines()

# Create a Tf-idf representation of the keywords
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(keywords)

# Perform Affinity Propagation clustering
af = AffinityPropagation().fit(X)
cluster_centers_indices = af.cluster_centers_indices_
labels = af.labels_

# Get the number of clusters found
n_clusters = len(cluster_centers_indices)

# Write the clusters to a csv file
with open("clusters.csv", "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerow(["Cluster", "Keyword"])
    for i in range(n_clusters):
        cluster_keywords = [keywords[j] for j in range(len(labels)) if labels[j] == i]
        if cluster_keywords:
            for keyword in cluster_keywords:
                writer.writerow([i, keyword])
        else:
            writer.writerow([i, ""])
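Once the clusters are exported, it can help to view each cluster's keywords side by side. The sketch below assumes rows in the same (cluster, keyword) layout the script writes; `group_by_cluster` and the sample rows are hypothetical and use only the standard library.

```python
from collections import defaultdict

def group_by_cluster(rows):
    """Collect keywords under their cluster label, skipping empty placeholders."""
    clusters = defaultdict(list)
    for cluster_id, keyword in rows:
        if keyword:
            clusters[cluster_id].append(keyword)
    return dict(clusters)

# Hypothetical rows in the (Cluster, Keyword) layout of clusters.csv
sample_rows = [
    (0, "running shoes"),
    (0, "trail running shoes"),
    (1, "hiking boots"),
    (2, ""),
]
grouped = group_by_cluster(sample_rows)
for cluster_id, members in grouped.items():
    print(cluster_id, ", ".join(members))
```

Note that rows read back from the CSV file with `csv.reader` carry the cluster label as a string rather than an integer.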

Script 5: Match keyword list to a list of predefined topics

This is similar to the previous script, except this allows you to match a list of keywords to a predefined set of topics.

This is great for large sets of keywords because it processes them in batches of 1,000 to prevent system crashes.
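The batching idea on its own can be sketched in a few lines. This is an illustration under the assumption that the keywords are already loaded into a list; `iter_batches` is a hypothetical helper, and the 1,000 default mirrors the batch size mentioned above.

```python
def iter_batches(items, batch_size=1000):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

# 2,500 placeholder keywords split into two full batches and one partial batch
keywords = [f"keyword {i}" for i in range(2500)]
batch_sizes = [len(batch) for batch in iter_batches(keywords)]
print(batch_sizes)  # [1000, 1000, 500]
```

Because only one slice is processed at a time, any intermediate objects created per keyword can be released before the next batch starts.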

How this script works

import pandas as pd
import spacy

# Load the Spacy English language model
nlp = spacy.load("en_core_web_sm")

# Define the batch size for keyword analysis
BATCH_SIZE = 1000

# Load the keywords and topics files as Pandas dataframes
keywords_df = pd.read_csv("keywords.txt", header=None, names=["keyword"])
topics_df = pd.read_csv("topics.txt", header=None, names=["topic"])

# Define a function to categorize a keyword based on the closest related topic
def categorize_keyword(keyword):
    # Tokenize the keyword
    tokens = nlp(keyword.lower())

    # Remove stop words and punctuation
    tokens = [token.text for token in tokens if not token.is_stop and not token.is_punct]

    # Find the topic that has the most token overlaps with the keyword
    max_overlap = 0
    best_topic = "Other"
    for topic in topics_df["topic"]:
        topic_tokens = nlp(topic.lower())
        topic_tokens = [token.text for token in topic_tokens if not token.is_stop and not token.is_punct]
        overlap = len(set(tokens).intersection(set(topic_tokens)))
        if overlap > max_overlap:
            max_overlap = overlap
            best_topic = topic

    return best_topic

# Define a function to process a batch of keywords and return the results as a dataframe
def process_keyword_batch(keyword_batch):
    results = []
    for keyword in keyword_batch:
        category = categorize_keyword(keyword)
        results.append({"keyword": keyword, "category": category})
    return pd.DataFrame(results)

# Process the keywords in batches and collect each batch's results
batch_results = []
for i in range(0, len(keywords_df), BATCH_SIZE):
    keyword_batch = keywords_df.iloc[i:i+BATCH_SIZE]["keyword"].tolist()
    batch_results.append(process_keyword_batch(keyword_batch))

# Combine the batches, falling back to an empty dataframe if there were no keywords
if batch_results:
    results_df = pd.concat(batch_results, ignore_index=True)
else:
    results_df = pd.DataFrame(columns=["keyword", "category"])

# Export the results to a CSV file
results_df.to_csv("results.csv", index=False)
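The matching rule at the heart of the script is a count of shared tokens between a keyword and each topic. The simplified sketch below shows that rule without spaCy: it splits on whitespace and uses a tiny hand-picked stop word set, both of which are assumptions made for illustration, and `best_topic` is a hypothetical name.

```python
# A deliberately small stop word set; spaCy's list is far larger
STOP_WORDS = frozenset({"the", "a", "an", "for", "of", "to", "in"})

def best_topic(keyword, topics):
    """Return the topic sharing the most non-stop-word tokens with the keyword."""
    keyword_tokens = set(keyword.lower().split()) - STOP_WORDS
    best, max_overlap = "Other", 0
    for topic in topics:
        topic_tokens = set(topic.lower().split()) - STOP_WORDS
        overlap = len(keyword_tokens & topic_tokens)
        # Only a strictly larger overlap replaces the current best match
        if overlap > max_overlap:
            best, max_overlap = topic, overlap
    return best

topics = ["running shoes", "hiking boots"]
print(best_topic("shoes for running", topics))  # running shoes
print(best_topic("tennis racket", topics))      # Other
```

As in the script, a keyword with no overlapping tokens falls back to "Other", and ties go to whichever topic appears first in the list.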

Working with Python for SEO

Python is an incredibly powerful and versatile tool for SEO professionals.

Whether you’re a beginner or a seasoned practitioner, the free scripts I’ve shared in this article offer a great starting point for exploring the possibilities of Python in SEO.

With its intuitive syntax and vast array of libraries, Python can help you automate tedious tasks, analyze complex data, and gain new insights into your website’s performance. So why not give it a try?

Good luck, and happy coding!

The post 5 Python scripts for automating SEO tasks appeared first on Search Engine Land.